The Modern AI Coding Revolution

AI-first coding isn’t a nice-to-have anymore—it’s rapidly becoming the default way software gets built. This post breaks down what’s changing inside product teams, why adoption faces resistance, and how founders and PMs can use AI to prototype faster, shift bottlenecks upstream, and ship at a fundamentally new pace.

I don’t think most founders fully grasp how fast the ground is moving under our feet right now.

Not in the vague “AI is important” sense. I mean at the level of how work actually gets done inside product teams. How ideas turn into software. How long that takes. Who’s involved. And where the real bottlenecks are starting to show up.

After a recent mastermind call with SaaS founders and operators who are actively navigating this shift, one thing became obvious very quickly: this isn’t a tooling upgrade. It’s a structural change in how software gets built. And it’s happening faster than mobile, faster than cloud, faster than SaaS ever did.

I said this on the call, and it frames everything that follows:

“This is a transition that's pretty much done by the end of this year... within 18 months, the new way, and I still agree with that timeline, within 6 months, if you're not doing AI-first coding development, you know, you're gonna fall way behind.”

That statement didn’t land as controversial in the room. It landed as familiar. Most people were already feeling it — even if they hadn’t fully articulated it yet.

The Resistance Is Real — and It Makes Sense

Almost every founder on the call shared the same story: significant resistance from development teams when AI is introduced in a serious way.

Several SaaSRise members talked about developers being reluctant to use AI not just in core engineering work, but even in customer support workflows. The objections sounded reasonable on the surface: worries about their jobs, plus concerns about security, enterprise compatibility, code quality, and long-term maintainability.

Another founder in our community shared that while tools were technically rolled out, adoption stalled. Developers installed them, tried them once or twice, then quietly reverted to their old workflows. The trust just wasn’t there yet.

This reaction is completely human. Developers have spent years mastering their craft. When a new tool shows up that can suddenly generate code, refactor logic, or spin up working features in minutes, the instinctive response isn’t curiosity. It’s self-preservation.

That’s why one line from the call kept coming back up:

“The person who is convinced that something is impossible should never interrupt the person who is doing it.”

Right now, there’s a widening gap between teams philosophically debating whether AI should work and teams that are already shipping faster because they’ve accepted that it does.

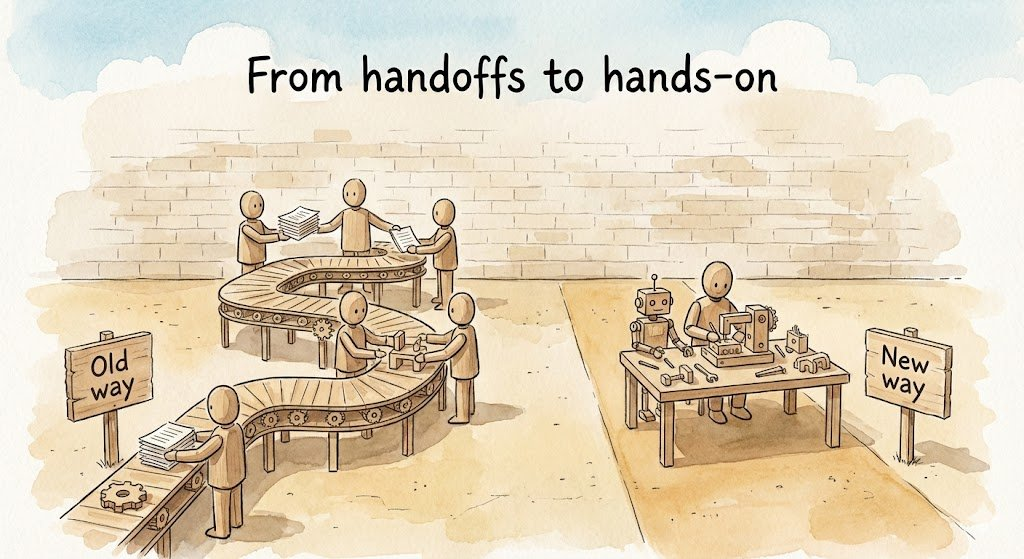

The Development Model Is Flipping — Quietly but Completely

One of the most important shifts discussed had nothing to do with specific tools. It had to do with who builds the first version.

The traditional model looked like this: product managers talked to customers, wrote specs, handed them to engineers, waited for questions, clarified, waited for implementation, reviewed, gave feedback, iterated. That cycle often took weeks or months.

What’s emerging instead is a very different pattern.

Product managers, founders, and business analysts — the people closest to the problem — are now using AI to build the first functional version themselves. Not wireframes. Not Figma mocks. Actual working software.

A mastermind call participant strongly advocated for this approach. Use AI for rapid prototyping to compress weeks or months of requirements gathering into days. Get something real on the screen. Click it. Break it. Improve it. Then hand it to senior engineers to make it scalable, secure, and production-ready.

This isn’t about cutting engineers out. It’s about moving their effort to where it creates the most leverage. Engineers stop being translators of intent and become collaborators on refinement, architecture, and production hardening.

Image from Saboo Shubham’s great recent post on AI PM

The PM Job Is Changing Faster Than Most PMs Realize

This shift doesn’t just change engineering. It fundamentally changes product management.

The PM job used to be translation. You talked to customers, synthesized their problems, wrote specs, and handed them to engineers. You were the bridge between “what people need” and “what gets built.” The value was in that translation layer.

That layer is compressing.

When agents can take a well-formed problem and produce working code, the PM’s role shifts. You’re no longer translating for engineers. You’re forming intent clearly enough that agents can act on it directly.

The spec is becoming the product.

Several PMs in our community described the same experience. What used to take weeks now takes hours. A PM writes a clear problem statement with constraints, points an agent at it, and reviews working code the same day. The time between “I know what we should build” and “here it is” has collapsed.

What’s interesting is that while implementation got easier, the work of knowing what to build didn’t. It actually became more important.

You don’t need to write the code yourself. You need to know what you want clearly enough that an agent can build it. The bottleneck isn’t implementation anymore. It’s clarity.

Speed Is Accelerating Everywhere, All at Once

Part of what’s making this shift feel disorienting is the pace of change across the entire AI ecosystem.

One participant shared that after just a few months inside a major AI organization, it felt like years’ worth of progress had shipped. New models, new agent frameworks, new APIs, new IDEs — all landing in rapid succession.

This isn’t unique to one company. AI companies large and small are shipping at this pace because AI coding agents dramatically compress build cycles. The rhythms that used to define product development (quarterly planning, monthly sprints, weekly releases) are collapsing into something much closer to continuous deployment of ideas.

When implementation barriers drop this fast, the bottleneck shifts upstream.

The scarce resource is no longer engineering capacity. It’s knowing what’s actually worth building.

What Adoption Looks Like When It Actually Works

One founder in our community shared a concrete example of what successful adoption looks like — and it wasn’t gradual.

They ran full-day team training sessions where everyone set up the same AI coding tools at the same time. No optional pilots. No one experimenting quietly on the side. This was a coordinated shift in how the team worked.

Developers were taught to run two to four terminals simultaneously, which fundamentally changes how problems get solved. Instead of working linearly, teams start thinking in parallel streams.

To reduce emotional resistance, they gamified adoption. Hit AI usage limits, earn rewards. It sounds trivial, but it mattered. It made experimentation feel safe and collective rather than risky and isolating.

The result was striking:

“We are using our existing codebase... half of our development teams say that they don't even use their IDEs almost at all. Unless it's something that Claude can't solve, but turns out that's not that much for us.”

This wasn’t a six-month transformation. It took roughly three weeks of consistent daily use before the team stopped wanting to go back.

Champions Beat Mandates Every Time

Another strong pattern was how founders overcame skepticism without forcing it.

Several SaaSRise members emphasized finding AI-embracing contractors or developers and letting them demonstrate what’s possible. Developers trust peers who speak their language far more than they trust executive mandates.

One mastermind participant shared a moment where a business analyst built something in a single day that engineers had previously insisted wasn’t suitable for AI. That one demo didn’t just change opinions — it changed behavior. Senior developers immediately started learning the tools themselves.

Fear of being left behind turns out to be far more motivating than persuasion.

How Teams Are Using AI in Practice Today

This isn’t just about greenfield experiments. Teams are using AI inside real, messy, enterprise environments.

Some founders described using tools like Greta and Lovable to rapidly prototype interfaces and workflows, often without a dedicated product manager involved. These weren’t throwaway mocks. They became starting points for real features.

Others shared how AI is being used for code review and requirements documentation while still maintaining strict quality control for enterprise clients. The work doesn’t disappear — it gets compressed and elevated.

Across teams, a consistent pattern emerged: AI didn’t eliminate engineering work. It changed where human judgment mattered most.

The New PM Skillset: What Actually Matters Now

As the PM role shifts, a new skill hierarchy is emerging.

Problem shaping has become the core skill. Can you take an ambiguous customer pain point and shape it into something clear enough that an agent — or a team of agents — can act on it? Can you identify the constraints that actually matter? Can you articulate what success looks like?

Context curation is the next layer. The quality of what an agent produces is directly proportional to the context you feed it. Effective PMs now maintain context docs that include real users (not personas), direct customer language, examples of what “good” looks like, approaches that already failed, and the constraints that truly shape the solution.
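To make the idea of a context doc concrete, here is a minimal sketch in Python. Nothing in it comes from the call: the `ContextDoc` class, its field names, and the sample data are all hypothetical, chosen only to mirror the five ingredients listed above. It shows one way to keep that context in a structured form, spot which sections are still empty, and render the whole thing as plain text to paste into an agent prompt.

```python
from dataclasses import dataclass, field


@dataclass
class ContextDoc:
    """Hypothetical container for the context a PM hands to a coding agent."""

    problem: str
    real_users: list[str] = field(default_factory=list)
    customer_quotes: list[str] = field(default_factory=list)
    good_examples: list[str] = field(default_factory=list)
    failed_approaches: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)

    def _sections(self) -> dict[str, list[str]]:
        # Section titles mirror the list in the paragraph above.
        return {
            "Real users": self.real_users,
            "Customer language": self.customer_quotes,
            "What good looks like": self.good_examples,
            "Approaches that already failed": self.failed_approaches,
            "Constraints": self.constraints,
        }

    def missing_sections(self) -> list[str]:
        """Return the titles of sections that are still empty."""
        return [title for title, items in self._sections().items() if not items]

    def render(self) -> str:
        """Render the doc as plain text, skipping empty sections."""
        lines = [f"Problem: {self.problem}"]
        for title, items in self._sections().items():
            if items:
                lines.append("")
                lines.append(f"{title}:")
                lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


# Made-up sample data, for illustration only.
doc = ContextDoc(
    problem="Finance admins cannot export invoices in bulk.",
    real_users=["Finance admin at a 40-person agency"],
    customer_quotes=["I download invoices one at a time every month."],
    constraints=["Must respect existing role permissions."],
)

print(doc.missing_sections())
print(doc.render())
```

In this sketch, `missing_sections()` acts as a quick checklist before handing work to an agent: the sample doc above still lacks examples of what good looks like and a record of approaches that already failed.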

Finally, evaluation and taste matter more than ever. Agents confidently produce things that look correct but miss the point. Knowing what’s “good enough to ship” versus “technically works” takes reps. There’s no shortcut.

The Mental Model Shift Founders and PMs Need to Make

The old instinct was to eliminate ambiguity as fast as possible. Lock the spec. Minimize rework.

The new instinct is to hold ambiguity longer. Use agents to explore the solution space quickly and cheaply. Let working software teach you what the real problem is before committing.

You don’t need perfect clarity upfront anymore. You need momentum, feedback, and judgment.

Once you experience that loop — build, test, react, iterate in hours instead of weeks — it’s very hard to go back.

The Timeline Is Shorter Than Most People Want to Admit

This transition is happening faster than the shift to mobile did between 2005 and 2015. Entire legacy systems that used to take years to rewrite can now be rebuilt in under a year.

I said this plainly on the call:

“You could rebuild all of FriendBuy in 9 months using these new tools. Like, the whole thing, you could rebuild in 9 months... 3 million lines of code. You could rebuild all of MailChimp. You could build an entire CRM system in 9 months.”

That’s not a future prediction. It’s an observation about what’s already possible if you organize correctly.
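For a rough sense of the scale involved, the arithmetic behind that quote can be sketched in a few lines of Python. The 3 million lines and the 9 months come from the quote; the 21 working days per month is an assumption added here, and the calculation assumes the rebuilt system ends up the same size as the original, which may well not hold.

```python
# Figures taken from the quote above.
TOTAL_LINES = 3_000_000
MONTHS = 9

# Assumption (not from the call): roughly 21 working days per month.
WORKING_DAYS_PER_MONTH = 21

lines_per_month = TOTAL_LINES / MONTHS
lines_per_working_day = lines_per_month / WORKING_DAYS_PER_MONTH

print(f"{lines_per_month:,.0f} lines per month")              # 333,333 lines per month
print(f"{lines_per_working_day:,.0f} lines per working day")  # 15,873 lines per working day
```

Under those assumptions, the whole team would need to land on the order of 16,000 lines of reviewed code every working day, which is why the "if you organize correctly" part carries so much weight.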

One founder captured the emotional reality perfectly:

“I wake up some day thinking we got 6 to 8 hours to figure out the new rev on all this stuff.”

And here’s the uncomfortable truth founders need to internalize:

“If you're not ahead of this, um, you know, you won't be in business 36 months from now. So, we owe it to ourselves as owners to get ahead of this and keep your legacy stack in production, but hire someone to rebuild it with these new tools.”

The winning strategy isn’t ripping everything out tomorrow. It’s running two tracks at once: keeping the current system alive while aggressively rebuilding the future in parallel.

Final Thought

If your job was mostly translating customer needs into documents for engineers, that workflow will be automated.

If your job was understanding problems so deeply that the right solution becomes obvious, you’re more valuable than ever. Agents amplify that understanding into shipped product faster than any team ever could before.

The translation layer is disappearing. What’s left is everything that actually mattered: user empathy, judgment, and taste.

This revolution isn’t coming. It’s already here. The only real question is whether you reorganize fast enough to take advantage of it — or spend the next year explaining why you didn’t.