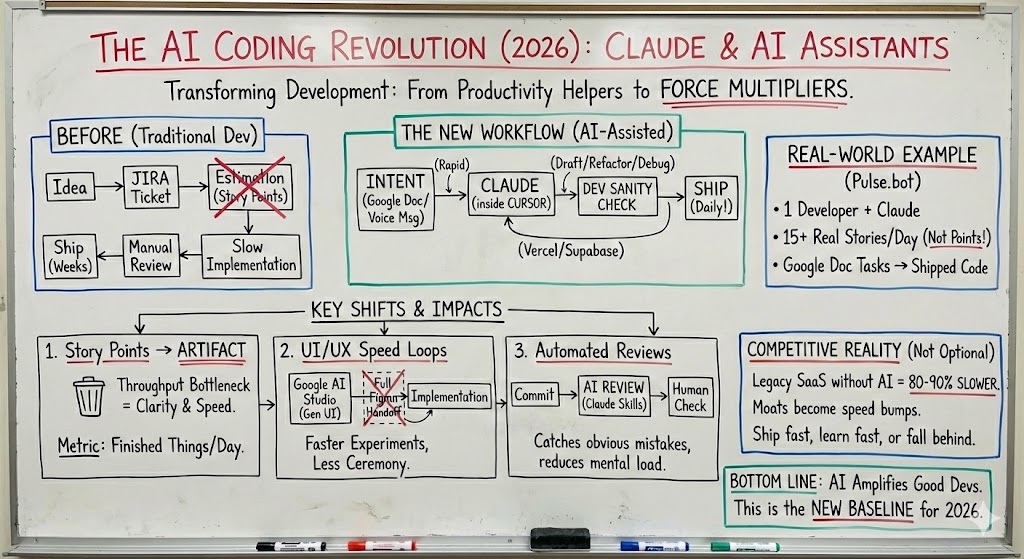

The AI Coding Revolution: How Claude and AI Assistants Are Transforming Software Development in 2026

Something fundamental has shifted in software development over the last 12–18 months. Not incrementally. Not “nice to have.” Fundamentally. AI coding tools—especially Claude paired with environments like Cursor—have crossed a threshold where they’re no longer productivity helpers. They’re force multipliers. And if you’re building software in 2026 and not deeply using them, you’re already falling behind. I don’t say that lightly. I’m watching this happen in real companies, shipping real features, with fewer people than “best practices” would ever recommend.

What This Looks Like in Practice (Not Theory)

Let me start with something concrete.

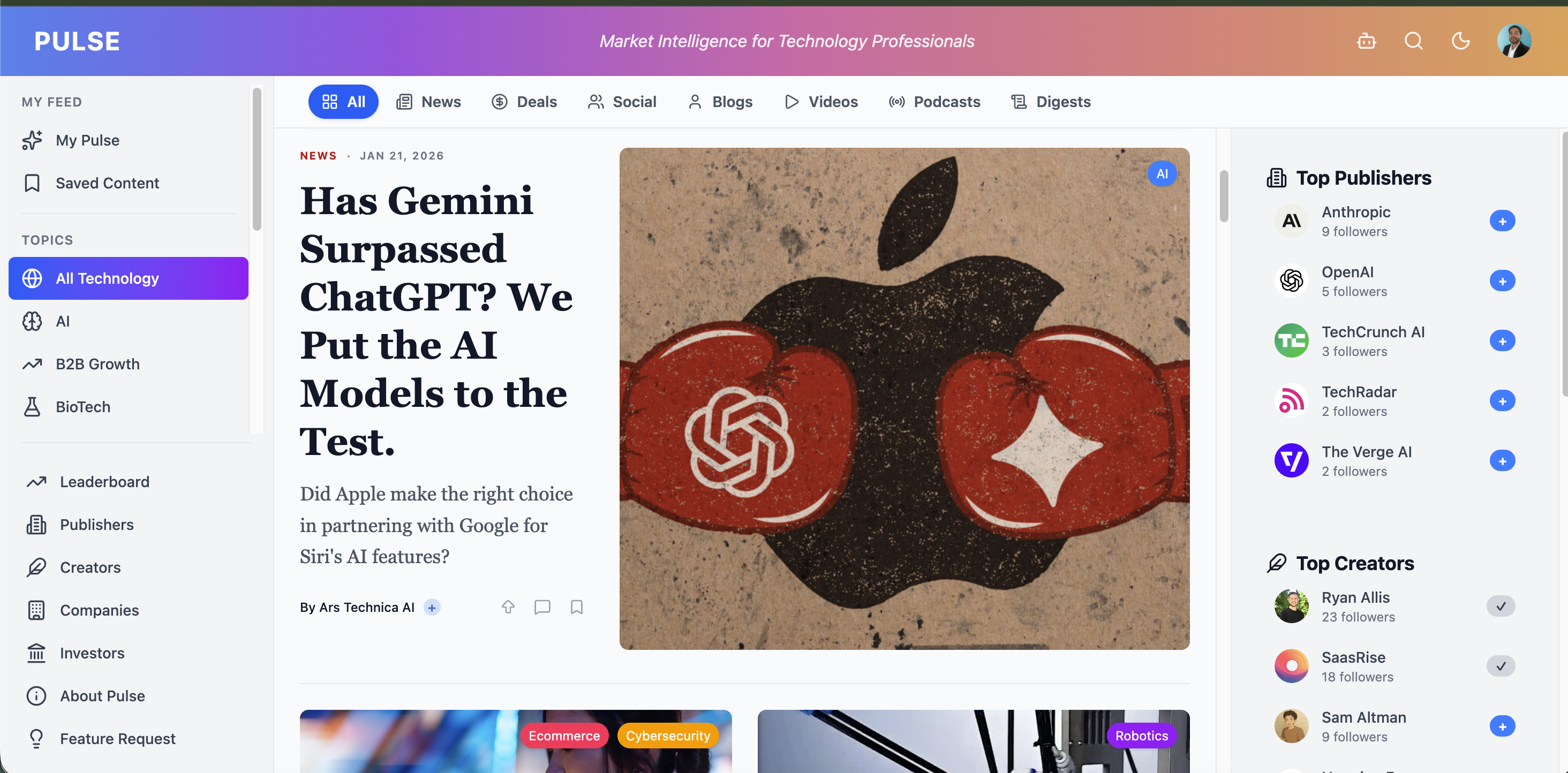

We’re building Pulse (you can literally go see the product at www.pulse.bot) with one developer. Not a large team. Not squads. One strong developer, working inside Cursor, using Claude Code as the primary collaborator, and shipping on a modern stack: Vercel for deployment and Supabase for the database.

The output is… honestly absurd compared to traditional software teams.

“He’s getting through about 15 individual stories per day. The quality is very high. This is not exactly what I would call development best practices, but for a one-person shop, it’s literally a Google Doc. Here’s a screenshot, here’s what to do. And then he’ll cross off about 15 of these stories a day.”

— Ryan Allis

That’s not a typo. Fifteen real stories per day. Not “points.” Actual shipped functionality.

We’re not living in JIRA. We’re not doing three ceremonies per week to talk about work instead of doing it. We keep a Google Doc, drop tasks in, and burn through them.

And yes, some Agile purists will cringe. But the output speaks louder than the process.

Story Points Are Becoming an Artifact of a Slower Era

One of the quiet casualties of AI-assisted development is story points.

Story points made sense in a world where human throughput was the bottleneck and estimating effort was a necessary evil. They make less sense when the biggest constraint isn’t implementation time — it’s clarity of intent and speed of iteration.

“Story points are sort of an ancient relic, at least in our world right now. The number of stories that he’s getting through—forget story points—he’s getting through about 15 individual stories per day using Claude Code within Cursor.”

— Ryan Allis

When the unit of progress becomes “finished things per day,” story points stop being helpful. What matters now is: how quickly can you turn a product idea into working code, get it deployed, and learn from reality?

AI coding changes the answer.

The New Workflow: Intent → Code (Almost Instantly)

Here’s what’s really going on behind the scenes: Claude isn’t acting like autocomplete anymore. It’s acting like a coding partner that can draft, refactor, debug, and explain — rapidly — while your developer stays in the driver’s seat.

In the Pulse workflow, it often looks like this:

Developer writes a task (or drops a screenshot + quick description) → Claude generates the implementation → developer sanity-checks it → the change ships.

And we’re not doing this in some chaotic “YOLO production” way: every change gets the developer’s sanity check before it ships. The speed comes from Claude making the boring, time-consuming parts radically cheaper: scaffolding, plumbing, repetitive patterns, refactors, first-pass debugging, and documentation.
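Pulse runs this loop through Claude Code inside Cursor, not through hand-written API calls. Purely to make the “screenshot + quick description → draft” step concrete, here is a minimal sketch that swaps in the Anthropic Python SDK (`anthropic`) instead. It assumes the SDK is installed, an `ANTHROPIC_API_KEY` environment variable is set, and that the model ID shown is valid; `build_task_message`, `draft_change`, and the prompt wording are hypothetical.

```python
"""Sketch: send a screenshot + short task description to Claude, get a draft back.

Assumptions (not from the article): the Anthropic Python SDK is installed,
ANTHROPIC_API_KEY is set, and MODEL is a valid model ID.
"""
import base64
import sys
from pathlib import Path

MODEL = "claude-opus-4-5"  # assumed model ID; check Anthropic's current model list


def build_task_message(screenshot_png: bytes, description: str) -> dict:
    """Build one user message holding the screenshot and the task text."""
    return {
        "role": "user",
        "content": [
            {
                "type": "image",
                "source": {
                    "type": "base64",
                    "media_type": "image/png",
                    "data": base64.standard_b64encode(screenshot_png).decode("ascii"),
                },
            },
            {
                "type": "text",
                # Hypothetical prompt wording, for illustration only.
                "text": f"Task: {description}\nPropose the code change as a unified diff.",
            },
        ],
    }


def draft_change(screenshot_path: str, description: str) -> str:
    """Ask Claude for a draft; a developer still reviews it before anything ships."""
    import anthropic  # imported here so build_task_message works without the SDK

    client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment
    message = build_task_message(Path(screenshot_path).read_bytes(), description)
    response = client.messages.create(model=MODEL, max_tokens=4096, messages=[message])
    # Join the text blocks of the reply into one string.
    return "".join(block.text for block in response.content if block.type == "text")


if __name__ == "__main__":
    print(draft_change(sys.argv[1], sys.argv[2]))
```

Run it as `python draft.py screenshot.png "Move the signup button above the fold"` (the filename is hypothetical). The output is only a draft; the developer's sanity check is still the step that decides whether it ships.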

A Hot Take: Figma Is Becoming Optional in Some Loops

This one will annoy designers, but it’s increasingly true in early-stage product cycles.

When you can use tools like Google AI Studio to quickly generate, iterate on, and refine UI directions (and then move straight into implementation), you don’t always need the classic “full-fidelity Figma → handoff → build” workflow for every feature.

Figma isn’t dead. But in a lot of fast-moving product teams, UI/UX iteration is moving closer to code — faster loops, more experiments, more shipping, less ceremony.

Getting Developers “Addicted” (In a Good Way)

One of the smartest things I’ve seen recently was how Cristian Frunze got his dev team onboarded.

He didn’t do the cautious “let’s try it for a sprint” thing. He went full immersion.

“I managed to get them addicted to Claude Code. I made them pull up four terminals and use them simultaneously, and gave them basically unlimited inference and unlimited usage. Right now, as soon as I have an idea, I open up my Claude Code terminal. I send a voice message to Claude Code, and that skill is able to write a full task description. It goes straight to ClickUp, our project management software.”

— Cristian Frunze

That workflow is the point. Thought → voice → structured task → execution. The distance between idea and shipping collapses.

And once devs experience that loop, it’s hard to go back to “write a ticket, wait for grooming, estimate points, implement slowly.”

Automated Code Reviews Are Becoming Normal

Another underrated shift: AI isn’t just writing code. It’s reviewing it.

Cristian’s team is running automated Claude-based code reviews on every commit.

“We do code reviews using automatic Claude Code skills. On every commit, a Claude Code instance automatically opens up and reviews the code before the commit. My developer says it catches most stuff.”

— Cristian Frunze

This doesn’t eliminate human review — and it shouldn’t. But it reduces the mental load and catches a lot of the obvious mistakes before a human even has to context-switch.

It’s leverage. And it compounds over hundreds or thousands of commits.
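The team's exact setup isn't described beyond a Claude Code review running on every commit. As a minimal sketch of one way to wire this up, assuming the `claude` CLI is installed and that its non-interactive print mode (`claude -p`) reads the diff from stdin, a Python Git `pre-commit` hook could look like the following. The prompt wording, the `VERDICT: PASS` / `VERDICT: FAIL` convention, and all function names are hypothetical, not part of Claude Code or of Cristian's workflow.

```python
#!/usr/bin/env python3
"""Hypothetical .git/hooks/pre-commit: ask Claude Code to review the staged diff.

Assumes the `claude` CLI is on PATH. The VERDICT convention is our own
illustration, not a Claude Code feature.
"""
import subprocess
import sys

REVIEW_PROMPT = (
    "You are reviewing a staged git diff before commit. "
    "List bugs, security issues, and obvious mistakes. "
    "End your reply with exactly one line: VERDICT: PASS or VERDICT: FAIL."
)


def staged_diff() -> str:
    """Return the diff of staged changes (what this commit would contain)."""
    result = subprocess.run(
        ["git", "diff", "--cached"], capture_output=True, text=True, check=True
    )
    return result.stdout


def parse_verdict(review: str) -> bool:
    """Return True only if the last non-empty line is 'VERDICT: PASS'."""
    lines = [line.strip() for line in review.splitlines() if line.strip()]
    return bool(lines) and lines[-1].upper() == "VERDICT: PASS"


def run_review(diff: str) -> str:
    """Pipe the diff to Claude Code in non-interactive print mode (-p)."""
    result = subprocess.run(
        ["claude", "-p", REVIEW_PROMPT],
        input=diff, capture_output=True, text=True, check=True,
    )
    return result.stdout


def main() -> int:
    diff = staged_diff()
    if not diff.strip():
        return 0  # nothing staged, nothing to review
    review = run_review(diff)
    print(review)
    if parse_verdict(review):
        return 0
    print("Claude review flagged issues; commit aborted "
          "(git commit --no-verify skips this hook).", file=sys.stderr)
    return 1  # a non-zero exit makes Git abort the commit


if __name__ == "__main__":
    sys.exit(main())
```

Save it as `.git/hooks/pre-commit` and make it executable (`chmod +x .git/hooks/pre-commit`). The sketch fails closed: if the reply doesn't end with the exact pass line, the commit is blocked. That is stricter, and noisier, than simply printing the review as advice, so a team might start with the advisory version.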

This Isn’t Just for Greenfield — It Works on Legacy Too

A common misconception is that AI coding only works on fresh projects with clean architecture. That’s wrong.

Teams are using Claude to refactor and modernize massive codebases — hundreds of thousands of lines — because AI is unusually good at the kind of work humans hate: making consistent changes across many files, updating patterns, finding edge-case breakage, and rewriting parts of systems without losing functionality.

Is it perfect? No. But it’s good enough that it changes what’s feasible, and how fast you can do it.

The Latest Claude Model Matters

Model quality matters a lot here, because coding isn’t just “generate text.” It’s reasoning, dependency tracing, and understanding what you actually meant.

Anthropic’s newest model at the time of writing, Claude Opus 4.5, is positioned specifically as its top model for coding, agents, and computer use.

If you’re serious about AI coding, use the best model you can afford. The delta between “pretty good” and “actually reliable” shows up as fewer bugs, fewer iterations, and less developer skepticism.

The Competitive Reality: This Is Not Optional

I’m going to be direct, because founders need direct.

“Every single legacy SaaS app is going to be completely disrupted in the next 36 months. If they don’t redesign and rebuild their product using these tools, their speed of development will be about 80-90% slower than the companies that have rebuilt their platform based on these tools. Ultimately, you won’t be able to compete beyond another 24 months.”

— Ryan Allis

This isn’t moralizing. It’s math.

If your competitor ships 5–10x faster than you, your “moat” turns into a speed bump. Features converge. UX catches up. Pricing pressure increases. And the market moves on.
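The quote's “80–90% slower” and the 5–10x figure are the same claim seen from opposite sides. A quick worked check, reading “s slower” as shipping a fraction (1 − s) of what a rebuilt competitor ships in the same time (our interpretation of the quote):

```latex
\begin{aligned}
\text{your throughput} &= (1 - s)\,T \quad \text{(competitor ships } T \text{ per month)}\\
\text{competitor's multiple} &= \frac{T}{(1 - s)\,T} = \frac{1}{1 - s}\\
s = 0.8 &\;\Rightarrow\; \frac{1}{0.2} = 5\times, \qquad s = 0.9 \;\Rightarrow\; \frac{1}{0.1} = 10\times
\end{aligned}
```

Put differently, what the competitor ships in one month takes you 5 to 10 months.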

In a lot of categories (especially non-enterprise), speed matters more than IP paranoia. If you can ship fast, iterate fast, and learn fast, you win.

The Bottom Line

AI coding assistants like Claude have already changed software development. The question isn’t “will this happen?” It’s “are you adopting it fast enough that you aren’t left behind?”

Pulse is an example of what’s possible when you fully lean in — again, you can see it live at www.pulse.bot. One developer, Claude Code inside Cursor, deployed on Vercel, data in Supabase, and UI iteration increasingly accelerated by tools like Google AI Studio.

AI hasn’t replaced good developers. It has amplified them.

If you’re building software in 2026, you should assume this is the new baseline. Anything less is competing with one hand tied behind your back.